2.1 The main service models of cloud computing

One of the first questions to ask before a cloud migration: where should one place the workload?

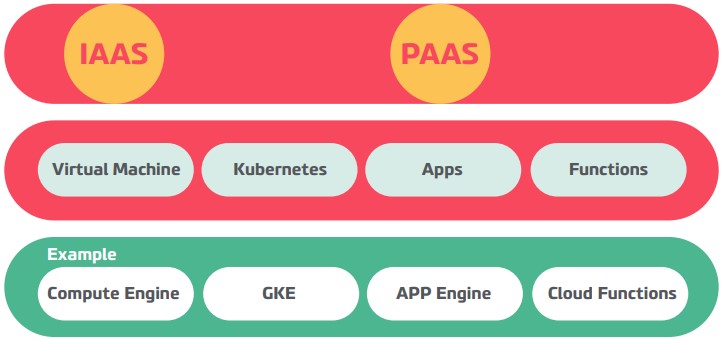

The cloud offers three types of service:

- IaaS – Infrastructure as a Service

- PaaS – Platform as a Service

- SaaS – Software as a Service

Infrastructure as a Service (IaaS)

The first service offered by the cloud is the traditional virtual machine service. Despite some differences, it is very similar to what clients run in their own data centre using technologies such as Kernel-based Virtual Machine (KVM) or VMware. In other words, the cloud offer is based on the allocation of traditional vCPU, memory, and disk resources.

IaaS is beneficial for most organisations: it allows complete control over the infrastructure and is based on a pay-per-use model. Companies pay for what they allocate, with access to support and the option of scaling resources to suit their needs.

In Google Cloud, this offer is known as Google Compute Engine (GCE). The service provides virtual machines hosted in Google’s infrastructure and takes advantage of its global fiber network. GCE offers a flexible pricing model, with per-second billing, automatic discounts and commitment discounts, and allows you to create custom machines according to your specific needs.
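To make the effect of per-second billing concrete, the following is a minimal sketch that compares the same short job billed per second and billed per hour. The hourly rate is a made-up figure, not a GCE list price, and the one-minute minimum charge is an assumption of this example.

```python
import math


def billed_cost(runtime_seconds, hourly_rate,
                granularity_seconds=1, minimum_seconds=60):
    """Cost of one VM run under a given billing granularity.

    hourly_rate is hypothetical; minimum_seconds is an assumed
    minimum charge, not a value taken from a price list.
    """
    # Round the runtime up to the next billing increment.
    increments = math.ceil(runtime_seconds / granularity_seconds)
    billable = max(increments * granularity_seconds, minimum_seconds)
    return billable * hourly_rate / 3600


# A 10-minute batch job on a machine with a hypothetical rate of 0.36 per hour.
per_second = billed_cost(600, 0.36)                          # billed for 600 s
per_hour = billed_cost(600, 0.36, granularity_seconds=3600)  # billed for 3600 s
```

With these illustrative numbers the job is charged for ten minutes instead of a full hour, which is why fine-grained billing matters most for short-lived or bursty workloads.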

Platform as a Service (PaaS)

The second service offered by the cloud is ideal for companies who want to migrate workloads without the responsibility of managing the infrastructure that supports these workloads.

In this service, the Cloud is responsible for managing and maintaining the infrastructure that supports the execution of the workload.

PaaS allows companies to focus on developing applications, relieving them of management tasks, software updates or security patches.

App Engine is Google Cloud’s offer for this type of service. Users only have to add their code, and all the infrastructure preparation is done automatically by Google.

Another offer is Cloud Functions, which provides an even higher level of abstraction than App Engine. It allows users to create functions that are triggered by cloud events, while issues such as scaling or setting application runtime parameters are managed automatically by the service. Given its versatility and particular nature, this kind of service is also classified as FaaS (Function as a Service).
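As an illustration of how little code a function-based service requires, here is a minimal sketch of an HTTP-triggered function in Python. It assumes, as we understand the Python runtime, that the platform passes the handler a Flask-style request object exposing a dict-like `args` attribute. The function name and the `FakeRequest` helper are our own and exist only so that the handler can be exercised locally.

```python
def hello_http(request):
    """HTTP-triggered function: greet the caller named in the query string.

    `request` is assumed to expose a dict-like `args` attribute,
    as a Flask request object does.
    """
    name = request.args.get("name", "World")
    return f"Hello, {name}!"


class FakeRequest:
    """Hypothetical local stand-in for the request object (testing only)."""

    def __init__(self, args):
        self.args = args


print(hello_http(FakeRequest({"name": "GCP"})))
```

Note that nothing in the handler deals with servers, ports or scaling; those concerns are left to the service.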

Kubernetes has become the standard for container orchestration. Currently, the largest cloud providers offer a Kubernetes service, which can be delivered as IaaS, as a hybrid, or as PaaS.

Cloud Run is Google Cloud’s offer for containers as PaaS. Cloud Run allows users to run their containers while abstracting away all management of a Kubernetes cluster, so the only concern is the creation and execution of the container; all the infrastructure needed to run it is provided by Google.

GKE, or Google Kubernetes Engine, is a hybrid offer between IaaS and PaaS for Kubernetes, which allows users to define their cluster while the setup and maintenance are done by Google.

Finally, users can instantiate their own Kubernetes cluster on the Google Compute Engine (GCE) service, i.e. a customised Kubernetes setup according to their own requirements.

Software as a Service (SaaS)

The third and final service is Software as a Service (SaaS), commonly described as software made available as a service by third parties.

SaaS platforms make software available to users over the Internet, usually on a monthly subscription.

Given the nature of SaaS, there is no notion of workload migration. The software is made fully available by the service provider, and the user only has to migrate the data.

An example of this offer from Google is Gmail, a hosted e-mail service for businesses. In a migration from a local e-mail server to Gmail, only the data is migrated. The components that supported the local e-mail service, such as the server, are not migrated because the service is provided entirely by Google as SaaS.

2.2 The relationship between the Cloud Provider and the Client: a model of shared responsibility

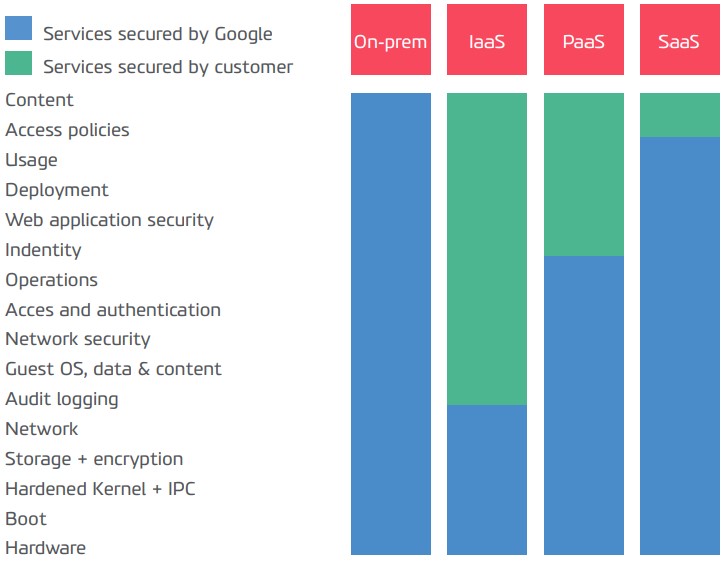

One of the points to consider when migrating to the Cloud is the shared responsibility model between the Cloud Provider and the Client, as shown in graph X.

This model establishes, depending on the type of service chosen, which components are the responsibility of the user and which are the responsibility of the Cloud Provider, in this case Google Cloud.

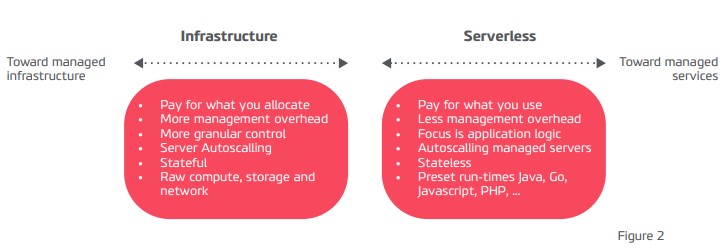

The model establishes that, as we move from so-called infrastructure services to more serverless services, the Cloud Provider becomes responsible for managing more of the stack components. On the other hand, by opting for more serverless services we lose control of, and access to, stack components that could be crucial to the execution of a workload.

Figure 2 demonstrates this trend by drawing a parallel between the various services in light of two macro contexts: infrastructure and serverless.
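The shift of responsibility can also be expressed as a small data structure. The sketch below is a deliberately simplified illustration: the six layer names and the exact split points are our assumptions and do not reproduce the figures above or any official Google Cloud matrix.

```python
# Simplified stack, ordered from the bottom (infrastructure) to the top.
# The layer names are illustrative assumptions.
STACK = ["hardware", "virtualisation", "operating system",
         "runtime", "application", "data"]

# Index of the first layer managed by the client for each service model
# (assumed split points).
CLIENT_STARTS_AT = {"IaaS": 2, "PaaS": 4, "SaaS": 5}


def responsibilities(model):
    """Return who manages which layers under the given service model."""
    split = CLIENT_STARTS_AT[model]
    return {"provider": STACK[:split], "client": STACK[split:]}


for model in ("IaaS", "PaaS", "SaaS"):
    print(model, responsibilities(model))
```

Reading the output from IaaS to SaaS, the provider's list grows and the client's list shrinks, which is the trade-off between convenience and control described above.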

2.3 Cloud Rationalisation

After looking at the different service models, it is necessary to analyse which strategy is best to adopt when migrating the workload to the Cloud. This workload assessment process, carried out to determine the best way to migrate or modernise each workload, is typically called Cloud Rationalisation.

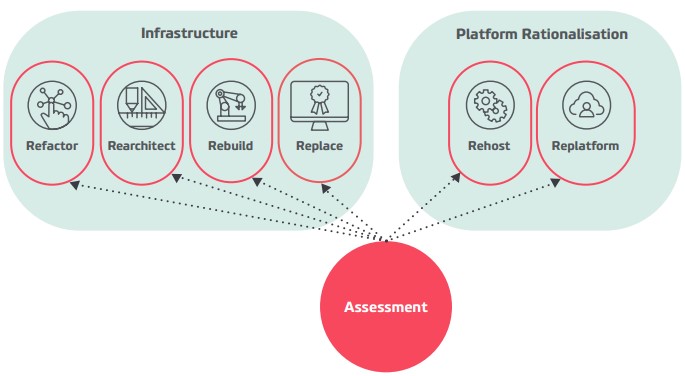

The Cloud Rationalisation process is made up of six R’s that can be grouped into two distinct approaches: the first, «Platform Rationalisation», focuses on migrating the system from the point of view of the workload infrastructure, while the second, «Application Modernisation», focuses on transforming the application itself.

The main focus of the «Application Modernisation» approach is the application, so we won’t go into much detail on the underlying R’s. Essentially, Refactor, Rearchitect, Rebuild and Replace aim to convert and/or migrate data and applications to make use of cloud computing, a process formally referred to as Cloudification. We present these four strategies first, starting with those that imply the smallest change to the application and ending with the outright replacement of one application by another, followed by the two «Platform Rationalisation» strategies, Rehost and Replatform.

- Refactor – Directly related to PaaS services, this strategy decomposes the application into various services so that it fits into PaaS services.

- Rearchitect – The objective of this strategy is to redefine the architecture of the application so that it is compatible with the offer from the Cloud Provider. In many cases the Refactor and Rearchitect strategies are complementary.

- Rebuild – In some cases, migrating the application to the cloud may not be possible for several reasons (e.g. technology, complexity). The best strategy is then to rebuild the application from scratch.

- Replace – This strategy aims to replace the application with an application in a SaaS model.

- Rehost – Usually called «Lift and Shift», this strategy aims to move a workload to the Cloud with minimal change to the application architecture. A practical example is the migration of a «LAMP» system (Linux, Apache, MySQL, PHP) from a virtualised VMware environment to the Cloud. A simple implementation would be to move the virtual disks of the machine, convert them so that the virtual machine can boot, and make the configuration changes needed to allow access to the application. Although simple in principle, this approach brings considerable challenges and some associated risk, hence the introduction of process automation tools such as Velostrata. Velostrata automates the creation of resources in the Cloud and the necessary conversions between the two systems, and reduces system downtime because the data is migrated in the background.

- Replatform – Also referred to as «Lift-Tinker-and-Shift», this strategy is very similar to the previous one; however, its main objective is to take advantage of existing Cloud services for the components used by the application being migrated. Going back to our «LAMP» example, one of these components is the MySQL database, for which GCP offers Cloud SQL. Instead of migrating this component as a virtual machine, the database would be migrated to Cloud SQL, thereby taking advantage of a Cloud-managed service (according to the shared responsibility model) and freeing the user from tasks such as running backups and managing the database.
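The six strategies above can be summarised as a small decision helper. This is only a sketch of one possible rule of thumb: the input flags and the order in which they are checked are our assumptions, and a real assessment would weigh many more factors.

```python
from enum import Enum


class Strategy(Enum):
    REHOST = "Rehost"
    REPLATFORM = "Replatform"
    REFACTOR = "Refactor"
    REARCHITECT = "Rearchitect"
    REBUILD = "Rebuild"
    REPLACE = "Replace"


PLATFORM_RATIONALISATION = {Strategy.REHOST, Strategy.REPLATFORM}


def approach_of(strategy):
    """Group a strategy under one of the two approaches."""
    if strategy in PLATFORM_RATIONALISATION:
        return "Platform Rationalisation"
    return "Application Modernisation"


def suggest_strategy(*, saas_alternative, migratable, can_change_code,
                     has_managed_equivalents, fits_provider_architecture):
    """Suggest a strategy from hypothetical yes/no answers about a workload."""
    if saas_alternative:
        return Strategy.REPLACE        # swap for a SaaS product
    if not migratable:
        return Strategy.REBUILD        # technology or complexity blocks a move
    if not can_change_code:
        # Infrastructure-level move; use managed services where they exist.
        if has_managed_equivalents:
            return Strategy.REPLATFORM
        return Strategy.REHOST
    if not fits_provider_architecture:
        return Strategy.REARCHITECT
    return Strategy.REFACTOR


# The LAMP example: no code changes, but MySQL has a managed equivalent.
lamp = suggest_strategy(saas_alternative=False, migratable=True,
                        can_change_code=False, has_managed_equivalents=True,
                        fits_provider_architecture=False)
print(lamp.value, "/", approach_of(lamp))
```

For the LAMP example the helper suggests Replatform, matching the Cloud SQL scenario described in the list; with `has_managed_equivalents=False` it falls back to Rehost.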

Platform Rationalisation

- Pros: Requires little initial investment in the migration process; quick to migrate and implement.

- Cons: The application cannot take full advantage of Cloud features and benefits; the cost of running the application in the Cloud may be higher.

Application Modernisation

- Pros: Applications take full advantage of Cloud features and benefits; running the application in the Cloud is generally more cost-effective.

- Cons: Requires some initial investment in the migration process and, in general, consumes a lot of time and resources.

Returning to our initial question: where to put the workload? There is no direct answer. It will always depend on an analysis of the application, on its maturity for the Cloud, and on the level of control required over the infrastructure that runs the workload.

Google Cloud provides some key elements in its online documentation, which may help in this choice, based on various use cases, as well as their recommended services (https://cloud.google.com/hosting-options/).

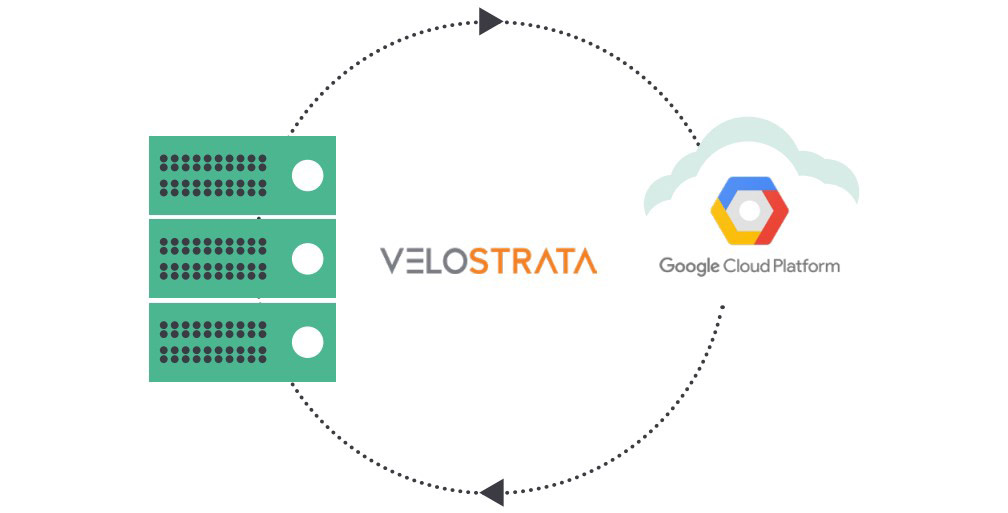

2.4 Velostrata

Velostrata is a tool used to migrate workloads between On-Prem/AWS environments and the Google Cloud Platform (GCP). Velostrata stands out for its ability to decouple the storage component from the workload’s compute component, which allows you to have a VM running in the Cloud while the storage component remains in the On-Prem environment. The synchronisation mechanism between the two environments allows data to be written continuously, so the service is not degraded. At any time the storage component can be migrated to the cloud environment, completing the migration of the workload to the Cloud. Conversely, the workload can be returned to the source environment (On-Prem or AWS) without data loss. This ability to decouple storage and compute gives the Client the opportunity to test and enjoy the advantages of a Cloud environment in a fast, agile and safe way.

2.5 GCP

Google is one of the main leaders in software and technology development around the world. Cloud services are increasingly becoming the norm for professionals and consumers, which creates the need to keep innovating in the solutions and services made available.

The Google Cloud Platform stands out for its global network, low latency, security, leadership in the innovation of big data tools, investment in open-source technologies, ease of use, and support for creating new applications and/or modernising existing ones. The global network allows services and resources to be allocated by zone, by region, or across multiple regions. Multi-regional services provide redundancy and distribution between these regions.
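The relationship between zones and regions is visible in the resource names themselves: a zone name such as `europe-west1-b` is the region name `europe-west1` followed by a zone suffix. The sketch below relies on that naming convention to check whether a set of zones spans more than one region. The helper names are ours, and the zone lists are only examples.

```python
def region_of(zone):
    """Return the region part of a zone name such as 'europe-west1-b'."""
    region, sep, suffix = zone.rpartition("-")
    if not sep or not region or not suffix:
        raise ValueError(f"not a zone name: {zone!r}")
    return region


def spans_multiple_regions(zones):
    """True if the given zones belong to more than one region."""
    return len({region_of(z) for z in zones}) > 1


single = ["europe-west1-b", "europe-west1-c"]   # two zones, one region
multi = ["europe-west1-b", "us-central1-a"]     # two regions

print(spans_multiple_regions(single), spans_multiple_regions(multi))
```

A deployment spread over several zones of one region protects against a zone failure, while only a deployment that spans regions offers the redundancy between regions mentioned above.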

This platform includes a vast number of services of various types, such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS). Notable service areas include Compute Engine (virtual machines), Storage and Databases, Networking, Big Data, Machine Learning, Identity & Security and Management & Developer Tools. These can be used in isolation or combined according to the needs of professionals, allowing them to customise their own applications and solutions.

2.6 Best Practices

Avoid «One Size Fits All» approaches.

Running workloads on virtual machines, in our case on GCE, is a valid option, but with this approach you will not take advantage of all the capabilities of the Cloud, nor of the benefits provided by its managed services.

Conversely, although it is a trend, running workloads in containers may not be the best option when migrating to the Cloud. Converting a workload into microservices can bring enormous complexity, not only from an architecture point of view but also in simple troubleshooting tasks.

Therefore, each workload should be analysed carefully and the benefits of running it on the various systems evaluated, treating each workload as unique.

Establish a path for the migration of a workload to the Cloud.

This can be a time-consuming and somewhat complex process. For the success of a migration it is crucial to establish a well-defined plan with feasible and tangible goals.

It is important to prioritise and categorise your workloads. To do so, make a detailed analysis of each workload and catalogue it according to its complexity, its dependencies and its relevance to the business.

Carefully choose which one will be your first workload to migrate.

The first migration is crucial for the motivation and success of the process. Choose a workload that does not present great technical complexity but is relevant from a business point of view.
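The cataloguing and selection steps described above can be sketched in a few lines. The scoring scale, the way effort is computed and the sample workloads are all hypothetical; they only illustrate the idea of filtering out complex workloads and then preferring the most business-relevant one.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Workload:
    name: str
    complexity: int                     # 1 (simple) to 5 (complex), assumed scale
    business_relevance: int             # 1 (low) to 5 (high), assumed scale
    dependencies: Tuple[str, ...] = ()


def effort(workload):
    """Rough migration effort: complexity plus number of dependencies."""
    return workload.complexity + len(workload.dependencies)


def first_candidate(workloads, max_effort=3):
    """Pick the most business-relevant workload among the simple ones."""
    simple = [w for w in workloads if effort(w) <= max_effort]
    if not simple:
        return None
    return max(simple, key=lambda w: w.business_relevance)


# Hypothetical catalogue.
catalogue = [
    Workload("billing-erp", 5, 5, ("database", "ldap")),
    Workload("intranet-wiki", 1, 2),
    Workload("customer-portal", 2, 4, ("mysql",)),
]

print(first_candidate(catalogue).name)
```

In this made-up catalogue the ERP system is excluded as too complex, and the customer portal is preferred over the wiki because it matters more to the business.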

Automate as much as you can.

Automating the migration process makes it more robust and less susceptible to failure. Tools such as Velostrata can significantly speed up the migration process and mitigate the associated risk.

Optimise the process continuously.

In each iteration of the migration process, take some time to optimise the workload migrated to the Cloud. There is always room for improvement, and small adjustments can have a significant impact on the performance of your workload.